- * 프린트는 Chrome에 최적화 되어있습니다. print

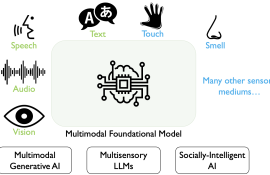

Most of today’s foundational models perceive only a small fraction of the world through language in textual form. However, text modality primarily contains high-level supervisory information created by humans. In contrast, how humans experience the world is profoundly multimodal, as we learn through sensory inputs and by discovering correlations across different modalities. When a baby eats a red shiny apple, they taste the sweet and sour flavor, smell the fresh and sweet fruity aroma, hear the crunchy sound and feel the hard texture. Through this experience, the baby learns to associate the crunch sound with the hard texture, the flavor with the red color, and the circular shape of the object, among other connections, as these associations are formed between different sensory input. True multimodal learning requires expanding the range of mediums, and consequently adopting an approach that can handle all these diverse and heterogeneous modalities. This presents a challenging next step in multimodal learning with significant potential. Such a model would be capable of learning previously unseen interactions between modalities. Furthermore, it would enable searching and retrieving information across various modalities. These learned cross-modal representations could also be leveraged in generative tasks to create diverse types of content. Most importantly, this type of system could ultimately interact with and assist humans. Some of the technical research directions our group plan to pursue include: (1) developing better methods to integrate and learn from the extreme heterogeneity across diverse modalities, (2) designing new architectural innovations that can dynamically select which modality or modalities to use for specific tasks, and (3) creating new approaches to handle the imbalance in the information content carried by different modalities. Our research group will push forward this research agenda and make significant contributions to the field of multisensory intelligence, aiming to develop AI systems that can interact with the world through a full-body experience – enhancing and augmenting human capabilities and creativity.

Major research field

Multimodal Machine Learning

Desired field of research

Multimodal Machine Learning, Audio Processing, Computer Vision, Audio-Visual Learning

Research Keywords and Topics

- Multimodal Learning

- Audio-Visual Learning

- Computer Vision

- Audio/Speech Processing

Research Publications

- Aligning Sight and Sound: Advanced Sound Source Localization Through Audio-Visual Alignment, TPAMI 2025

- Seeing Speech and Sound: Distinguishing and Locating Audios in Visual Scenes, CVPR 2025

- Sound Source Localization is All about Cross-Modal Alignment, ICCV 2023

- Learning to Localize Sound Source in Visual Scenes: Analysis and Applications, TPAMI 2021

- Learning to Localize Sound Source in Visual Scenes, CVPR 2028